Radiance Surfaces: Optimizing Surface Representations with a 5D Radiance Field Loss

SIGGRAPH 2025 (Conference Track)

Abstract

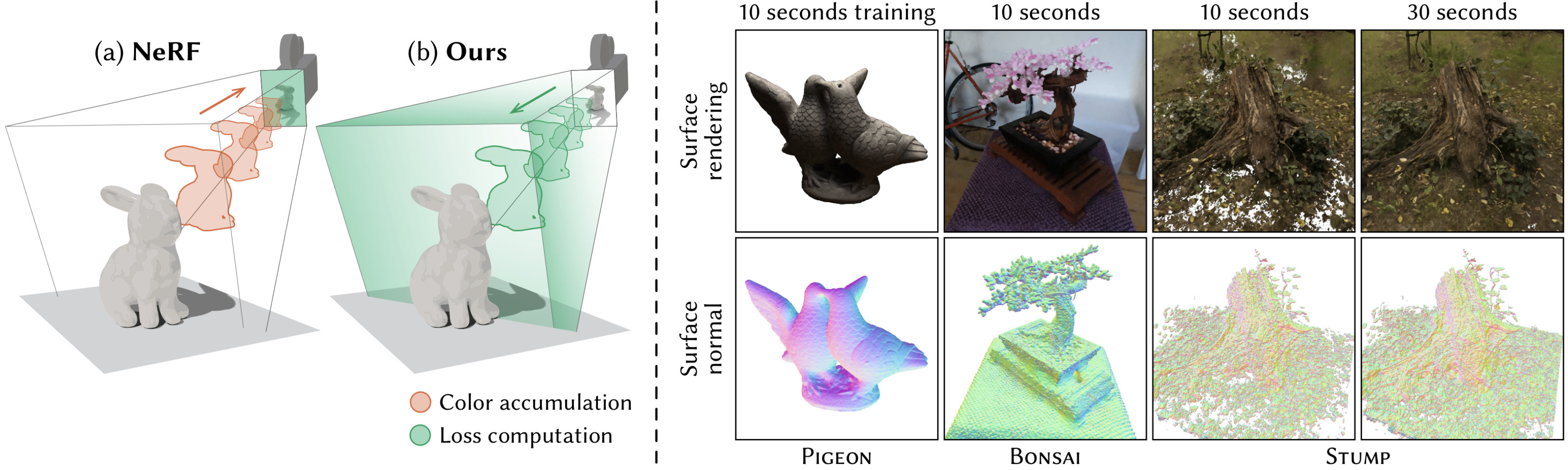

We present a fast and simple technique to convert images into a radiance surface-based scene representation. Building on existing radiance volume reconstruction algorithms, we introduce a subtle yet impactful modification of the loss function requiring changes to only a few lines of code: instead of integrating the radiance field along rays and supervising the resulting images, we project the training images into the scene to directly supervise the spatio-directional radiance field. The primary outcome of this change is the complete removal of alpha blending and ray marching from the image formation model, instead moving these steps into the loss computation. In addition to promoting convergence to surfaces, this formulation assigns explicit semantic meaning to 2D subsets of the radiance field, turning them into well-defined radiance surfaces. We finally extract a level set from this representation, which results in a high-quality radiance surface model. Our method retains much of the speed and quality of the baseline algorithm. For instance, a suitably modified variant of Instant NGP maintains comparable computational efficiency, while achieving an average PSNR that is only 0.1 dB lower. Most importantly, our method generates explicit surfaces in place of an exponential volume, doing so with a level of simplicity not seen in prior work.
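The change of loss described above can be illustrated for a single camera ray. The following is a minimal NumPy sketch of one plausible reading of the abstract, not the authors' implementation: the function names, the per-sample opacity parameterization, and the omission of a background term are all assumptions made for illustration. The conventional loss blends sample colors first and then compares the result to the pixel, whereas the radiance field loss compares every sample's color to the pixel and uses the blending weights to combine the per-sample errors.

```python
import numpy as np

def blend_weights(alphas):
    """Standard alpha-compositing weights w_i = T_i * alpha_i along one ray."""
    # Transmittance T_i: fraction of light that survives all samples before i.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    return trans * alphas

def image_space_loss(alphas, colors, target):
    """Conventional loss: blend the colors first, then compare to the pixel."""
    w = blend_weights(alphas)
    rendered = (w[:, None] * colors).sum(axis=0)
    return float(np.sum((rendered - target) ** 2))

def radiance_field_loss(alphas, colors, target):
    """Hypothetical sketch of the modified loss: compare each sample's
    radiance to the pixel, then blend the per-sample errors."""
    w = blend_weights(alphas)
    per_sample_error = np.sum((colors - target) ** 2, axis=1)
    return float(np.sum(w * per_sample_error))

# Two samples on one ray: a half-transparent red one in front of an opaque blue one.
alphas = np.array([0.5, 1.0])
colors = np.array([[1.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0]])
target = np.array([0.5, 0.0, 0.5])  # purple training pixel

loss_image = image_space_loss(alphas, colors, target)
loss_field = radiance_field_loss(alphas, colors, target)
print(loss_image, loss_field)
```

In this toy case the image-space loss is zero because a semi-transparent mix of red and blue reproduces the purple pixel, while the per-sample loss stays positive until a single sample carries the target color. This is consistent with the abstract's claim that the formulation promotes convergence to surfaces, although the paper's exact loss may differ from this sketch.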

External project page

Downloads

Publication

Code

BibTeX Reference

@inproceedings{Zhang2025RadianceSurfaces,
  author = {Zhang, Ziyi and Roussel, Nicolas and M{\"u}ller, Thomas and Zeltner, Tizian and Nimier-David, Merlin and Rousselle, Fabrice and Jakob, Wenzel},
  title = {Radiance Surfaces: Optimizing Surface Representations with a 5D Radiance Field Loss},
  year = {2025},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  doi = {10.1145/3721238.3730713},
  booktitle = {Proceedings of the Special Interest Group on Computer Graphics and Interactive Techniques Conference Conference Papers},
  articleno = {21},
  numpages = {10},
  series = {SIGGRAPH Conference Papers '25}
}